To build the Mission Guild or unlock certain amenities for your Headquarters, you’ll need Dire Beastbone. Here’s how to get Dire Beastbone in Eiyuden Chronicle: Hundred Heroes.

Where to Find Dire Beastbone in Eiyuden Chronicle: Hundred Heroes

In Eiyuden Chronicle: Hundred Heroes, Dire Beastbone can be found in two locations: the second section of Bounty Hill, and The Den of the Dunes in The Great Sandy Sea. To find this rare material, look for sparkling bones on the floor, which mark a resource spot. Interact with the spot for a chance to get some Dire Beastbone. As you search, you’ll likely pick up a few Pelts too, which you also need for your Headquarters.

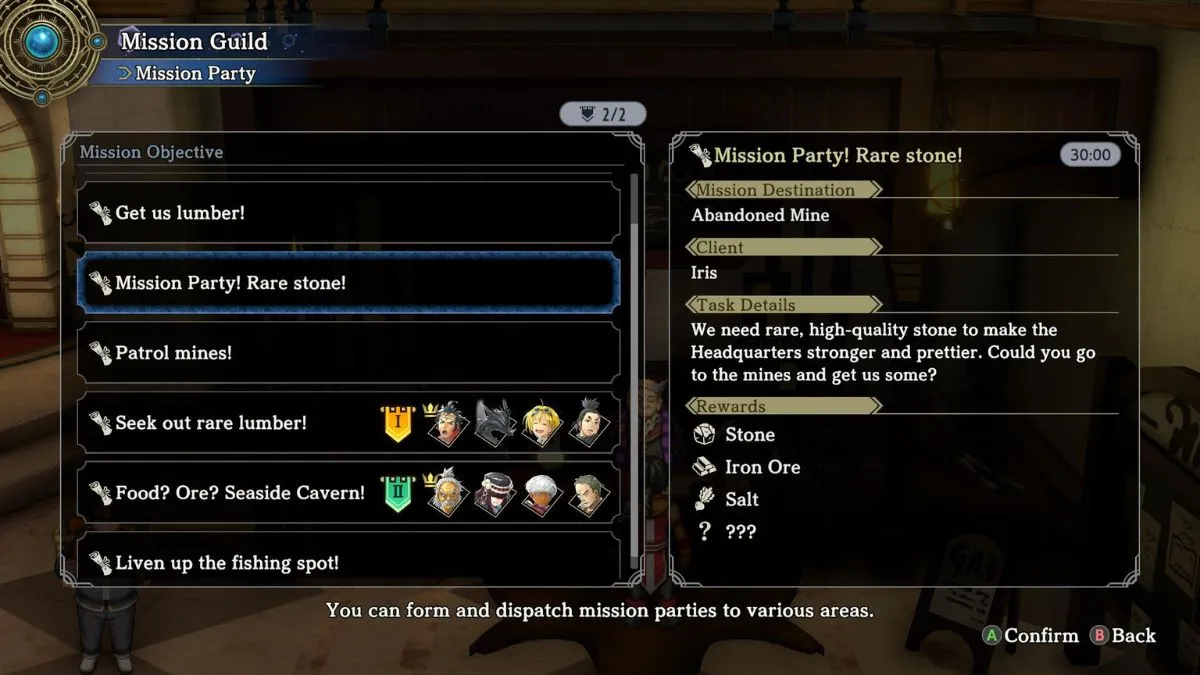

Alternatively, you can get Dire Beastbone from the Mission Guild at your Headquarters. However, you’ll need to obtain some of the material on your own first, because it’s required to build the Mission Guild, which becomes available once you reach Headquarters Level 2.

To farm Dire Beastbone using the Mission Guild, assign a team of four to the “Patrol mines!” mission objective, then wait 30 minutes in real time for the squad to return. They’ll likely bring back at least two Dire Beastbone. You can increase your yield by placing a hero with the “Increase Stone Acquisition” or “Increase Party Foraging” skill in the first slot.

Best Mission Guild Characters to Get Dire Beastbone

The heroes with the Increase Stone Acquisition or Increase Party Foraging skill are:

- Durlan – Increase Stone Acquisition.

- Hildi – Increase Party Foraging.

- Sabine – Increase Party Foraging.

- Zabi – Increase Party Foraging.

- Francesca – Increase Party Foraging.

- Barnard – Increase Party Foraging.

- Gieran – Increase Party Foraging.

- Melridge – Increase Party Foraging.

- Hakugin – Increase Party Foraging.

- Marin – Increase Party Foraging.

- Leon – Increase Party Foraging.

- Quinn – Increase Party Foraging.

- Chandra – Increase Party Foraging.

- Lilwn – Increase Party Foraging.

- Euma – Increase Party Foraging.

- Rudy – Increase Party Foraging.

- Lam – Increase Party Foraging.

How to Use Dire Beastbone in Eiyuden Chronicle: Hundred Heroes

Dire Beastbone is used to construct certain buildings at your Headquarters. Here is a list of every project that requires Dire Beastbone in Eiyuden Chronicle: Hundred Heroes:

- Build Mission Guild – 1 Dire Beastbone, 200 Food, 40 Pelt.

- Fishing Rod Upgrade 1 – 4 Dire Beastbone, 3 Mystic Lumber.

- Stables: Egg Rarity 1 – 5 Dire Beastbone.

- Fishing Spot: Productivity 2 – 5 Dire Beastbone.

- Fishing Rod Upgrade 2 – 5 Dire Beastbone, 4 Excellent Lacquer.

- Fishing Spot: Productivity 3 – 6 Dire Beastbone.

- Build Mysterious Room – 3 Dire Beastbone, 4 Excellent Lacquer, 5 Platinum Ore.

Eiyuden Chronicle: Hundred Heroes is available now on PC, PlayStation 5, Xbox Series X|S, PlayStation 4, Xbox One, and Nintendo Switch.