Updated May 3, 2024

Checked for more codes!

If racing gives you a thrill, this is the Roblox title that will push all the right buttons. Feel the adrenaline rush as you leave your opponents in the dust. If you want the fastest wheels, Midnight Chasers: Highway Racing codes will help!

All Midnight Chasers: Highway Racing Codes List

Midnight Chasers: Highway Racing Codes (Active)

- ThanksFor15000: Use for 20k Cash

- ThxFor5Mil!: Use for 30k Cash

- GeneralKiko: Use for 10k Cash

Midnight Chasers: Highway Racing Codes (Expired)

- MobileBack!

- BigUpdate!

- ThanksFor5750

- Race!

- ThanksFor5250

- CarsUpdate

- ThanksFor100

- ThanksFor9850

- NewMap!

- Release!

- ThanksFor8250

- ThanksFor3000

- ThxFor3Mil

- Update

- ThanksFor6750

- HappyNewYear!

How to Redeem Codes in Midnight Chasers: Highway Racing

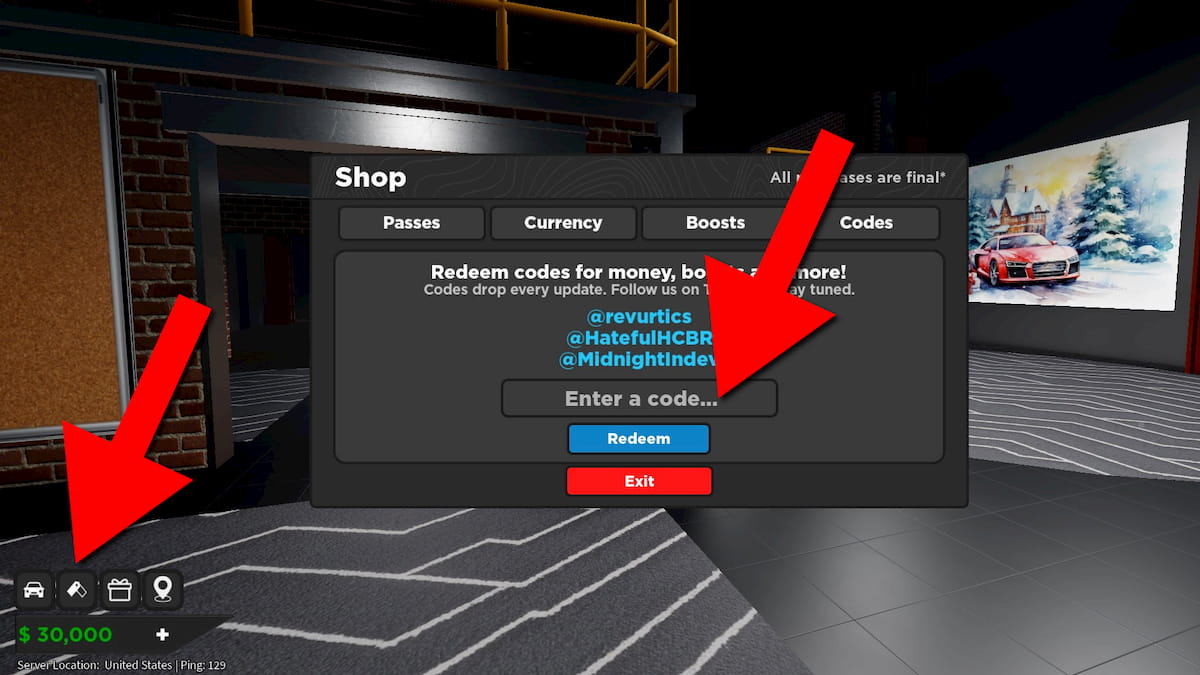

Redeeming Midnight Chasers: Highway Racing codes is simple—follow these steps:

- Run Midnight Chasers: Highway Racing in Roblox.

- Click on the price tag icon in the bottom-left corner.

- Go to the Codes tab.

- Input a working code into the Enter a code field.

- Click on Redeem to grab your freebies!

If you’re a fan of Roblox driving games, check out our articles on The Ride codes and MotoRush codes, and grab all the free rewards before they’re gone!

The Escapist is supported by our audience. When you purchase through links on our site, we may earn a small affiliate commission.