The Cherished Fracture is the final piece, forgive the pun, in the Forgotten Kingdom’s main quest. But this is Remnant 2, so of course it has multiple uses. Here is every outcome for the Cherished Fracture in Remnant 2.

What Should You Do with the Cherished Fracture in Remnant 2

When you finally obtain the Cherished Fracture in the Luminous Vale, you'll be shuffled along almost immediately to the Bloodless Throne. You could give Lydusa the fracture there, but it's not your only option.

Giving Lydusa the Cherished Fracture in the Bloodless Throne

If you read the item description of the Cherished Fracture, you'll find that it's incomplete. That doesn't stop you from giving it to Lydusa right away. However, because it's incomplete, Lydusa will go absolutely insane, and you'll be forced to fight her wrathful, broken avatar.

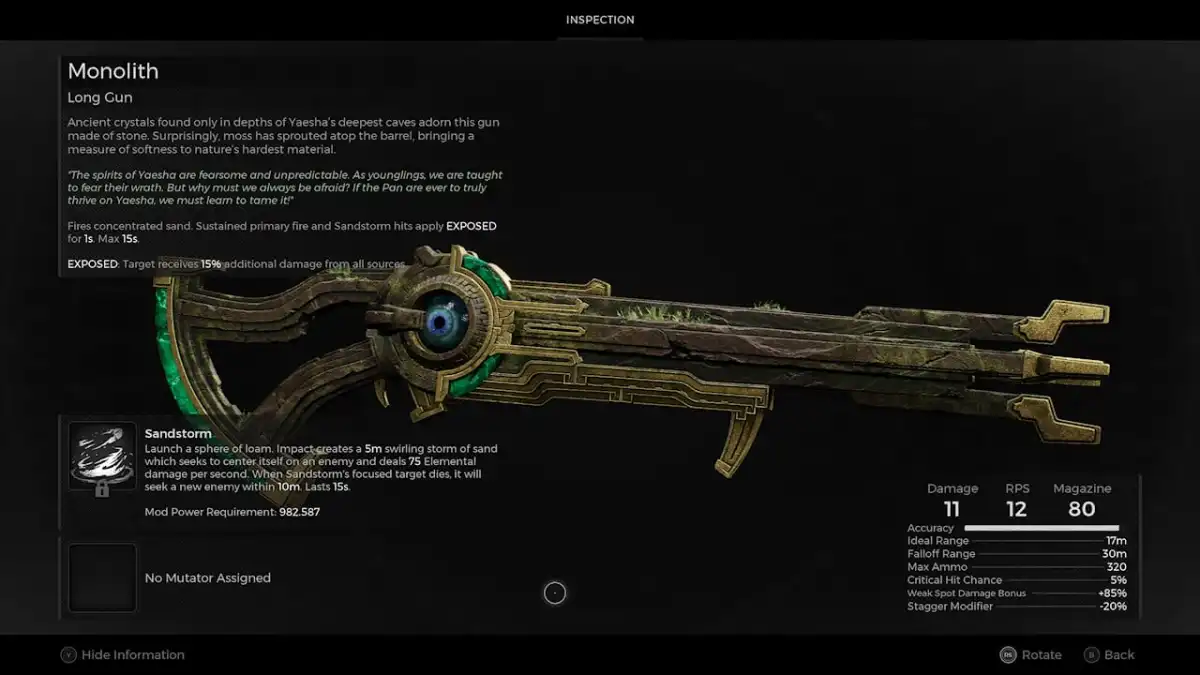

But it's a great, dynamic fight, and one of my favorites in the DLC. Once you've defeated Lydusa, you'll get the Eye of Lydusa, proving that even in death, we're more than happy to take from her. Guess she was right about us after all!

The Eye of Lydusa can then be crafted into the Monolith, a Long Gun with intense accuracy and a mod that creates an area-of-effect sandstorm, which clings to one enemy and moves on to the next once that enemy is dead. Excluding the Polygun, it's the best new weapon in The Forgotten Kingdom.

Additionally, if you've been talking to Walt at each mural, you can get the Burden of Mesmer ring for a mere 1,000 scrap by opting to kill Lydusa. Normally, this ring costs 100,000 scrap, so it's a pretty big discount! However, if you've been ignoring Walt, you can't tell him that you've slain the Goddess. Ask me how I know.

Giving Lydusa the Cherished Fracture in Goddess's Rest

If you want the "good" ending, or at least the ending that feels fuller, the best outcome for the Cherished Fracture in Remnant 2 is giving it to Lydusa in Goddess's Rest. Goddess's Rest is full of secrets, and it's also the place where she and Thalos spent their happiest days.

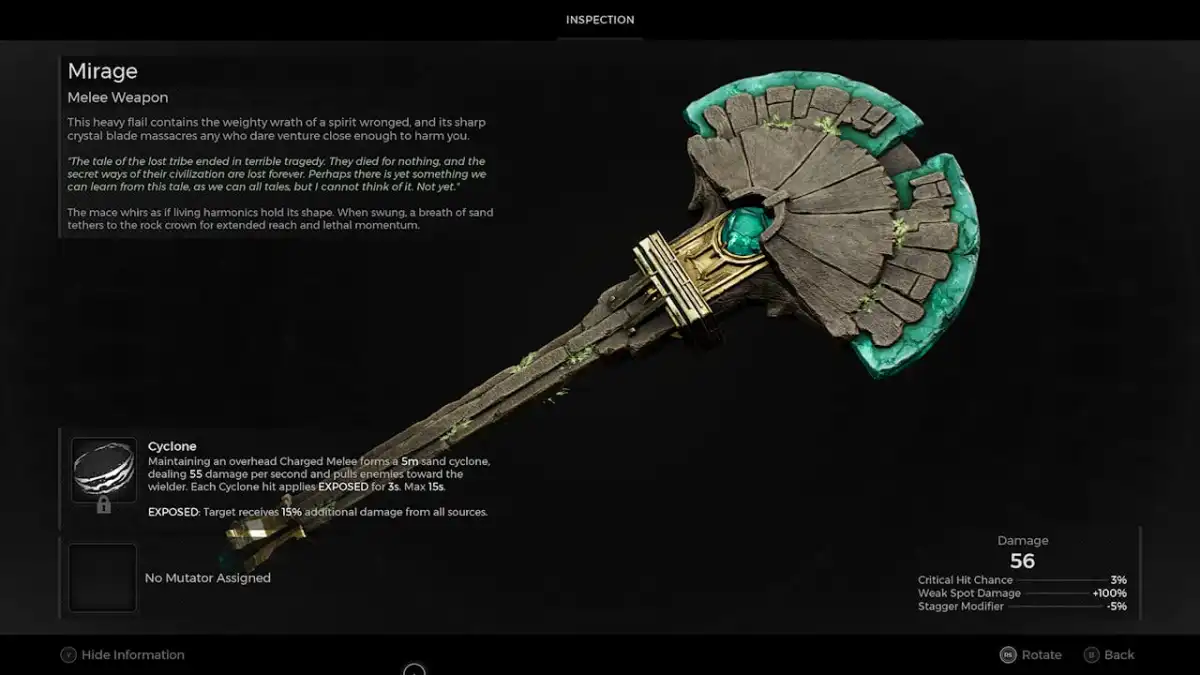

Simply place the Cherished Fracture in the gold bowl in the temple behind her mural, and Lydusa will regenerate, fully formed. You'll skip her boss fight, and she'll give you a crafting item that lets you make Mirage.

Mirage is a fun melee weapon that you can spin over your head to create a small area-of-effect cyclone. Thematically, it pairs well with Monolith. Of course, there’s really nothing stopping you from playing this adventure twice and getting every outcome for the Cherished Fracture in Remnant 2, which I highly recommend.

Remnant 2 is available now on PC, PlayStation 5, and Xbox Series X|S.